Docker turns six this year, yet many security teams still lack a distinct strategy for containers and often treat container security separately from cloud security. For security teams, it’s critical to understand the intrinsic link between public cloud and containers.

Containers + Cloud = Peanut Butter & Jelly

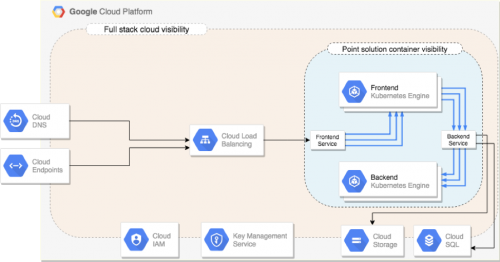

Computing has gone through several evolutions over the past two decades. I’ll save the history lesson for a future post, but suffice it to say, developers grew tired of dealing with operating system and application dependencies. Containers addressed this issue but created a whole new set of security challenges. Market demand grew quickly, and point security products – from commercial to open source – began to spring up. While these point products narrowly addressed some of the security challenges with containers, they completely missed the bigger picture. Most apps built on containers also use a mix of PaaS services such as Amazon Redshift, Google Cloud Datastore and Azure SQL (see the diagram above, and you’ll get my point). Without a complete view into your cloud’s API layer, which knows exactly which cloud-native services are in use, how can your teams accurately assess the total risk containers add to your enterprise?

Consider a scenario where a fleet of containers is vulnerable to the latest CVE, but your AWS security group is not open on the port required to attack. This full stack security knowledge dramatically changes the risk equation, yet container security point products would miss it. Why? Because they often do not have visibility into the cloud provider’s all-important API layer. With that knowledge, your teams can defer this vulnerability and first remediate the ones that are publicly exposed. The question then becomes, what are we missing with our current strategy that looks at container security in isolation from the rest of the cloud architecture?
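To make the scenario above concrete, here is a minimal Python sketch of that prioritization. Everything in it is an assumption for illustration: the `Finding` shape, the `prioritize` helper, the container names, the placeholder CVE ID and the security group names are all made up, and the map of publicly open ports is a plain dictionary standing in for what you would actually pull from your cloud provider’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A vulnerable container as a scanner might report it (hypothetical shape)."""
    container: str
    cve_id: str
    port: int            # port the vulnerable service listens on
    security_group: str  # security group attached to the container's host


def prioritize(findings, public_ports):
    """Split findings into (exposed, shielded).

    public_ports maps a security group name to the set of ports that group
    opens to the internet. In practice this would come from the cloud
    provider's API; here it is a plain dict supplied by the caller.
    """
    exposed, shielded = [], []
    for finding in findings:
        open_ports = public_ports.get(finding.security_group, set())
        (exposed if finding.port in open_ports else shielded).append(finding)
    return exposed, shielded


# Made-up inventory: the same CVE behind two different security groups.
findings = [
    Finding("checkout-api", "CVE-EXAMPLE-0001", 8080, "sg-web"),
    Finding("report-worker", "CVE-EXAMPLE-0001", 8080, "sg-internal"),
]
public_ports = {"sg-web": {443, 8080}, "sg-internal": set()}

exposed, shielded = prioritize(findings, public_ports)
print("Fix first:", [f.container for f in exposed])
print("Fix later:", [f.container for f in shielded])
```

Both containers carry the same CVE, but only one sits behind a security group that exposes the vulnerable port, so it goes to the front of the queue. A scanner that sees only the container image would rank the two identically.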

Holistically Addressing Containers

The first step in correcting this is setting a clear goal so that your team knows what it is trying to achieve. This could be as simple as “enabling the secure use of containers through a combination of developer education, agreed-upon security standards, automated enforcement of best practices and close integration with cloud provider native APIs.” The key here is that you are not limiting teams to buying yet another security tool but rather encouraging them to take a holistic approach that includes people, process and technology.

What does this really mean? Embrace DevOps and shift security left. Until your teams have integrated both security processes and tools into the development lifecycle, it's not possible to have DevSecOps (the union of DevOps and security, with security shifted as early in the development process as possible). And given the speed at which containers and cloud operate, there really is no other successful path forward for security teams. Once you’ve set a clear goal, teams will want to unleash their energy on the three key areas within the container lifecycle: build, deploy and run, each of which presents unique challenges and deserves close attention.

Wrapping It Up

With predictions of container adoption reaching 90% of enterprises in 2019, it’s critical that your team address containers as an integral part of your cloud security strategy. If you don’t have someone on your team focused on developing and implementing a cloud security strategy that includes containers, you are likely looking at a skewed picture of the risks containers are adding to your organization. While it's always tempting to bolt on yet another point security product, the most mature organizations see cloud and containers as one and the same. Addressing container and cloud security separately will leave you blind to risks that an integrated strategy would catch.

Stay tuned for more in my next post.