
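ChatGPT is the fastest-growing consumer application in history, reaching 100 million monthly active users just two months after launch. While AI applications like it can greatly boost productivity and creativity, they also pose a serious data security risk to modern enterprises.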

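Many organizations may be surprised to learn that their employees are already using AI-based tools to streamline daily workflows, potentially putting sensitive company data at risk. A software developer might upload proprietary code to find and fix bugs, while a corporate communications team might ask for help drafting a sensitive press release.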

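To guard against the growing risk of sensitive data leaking to AI applications and APIs, we are pleased to announce a set of new capabilities in our Next-Generation CASB solution for securing ChatGPT and other AI applications, including: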

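- Comprehensive app usage visibility for complete monitoring of all SaaS usage activity, including employee use of new and emerging generative AI apps that can put data at risk.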

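- Granular SaaS application controls that give employees safe access to business-critical applications while limiting or blocking access to high-risk apps, including generative AI apps that have no legitimate business purpose.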

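- Advanced data security that provides ML-based data classification and data loss prevention to detect and stop well-meaning employees from inadvertently leaking company secrets, personally identifiable information (PII), and other sensitive data to generative AI apps.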

How Palo Alto Networks Protects Against ChatGPT Data Leakage

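If you are an existing Palo Alto Networks Prisma Access or Next-Generation Firewall customer, you can purchase the NG-CASB bundle license to turn on the new ChatGPT capabilities right away. Palo Alto Networks Next-Generation CASB customers can now guard against data leakage to ChatGPT by gaining visibility, controlling usage, and preventing sensitive data loss.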

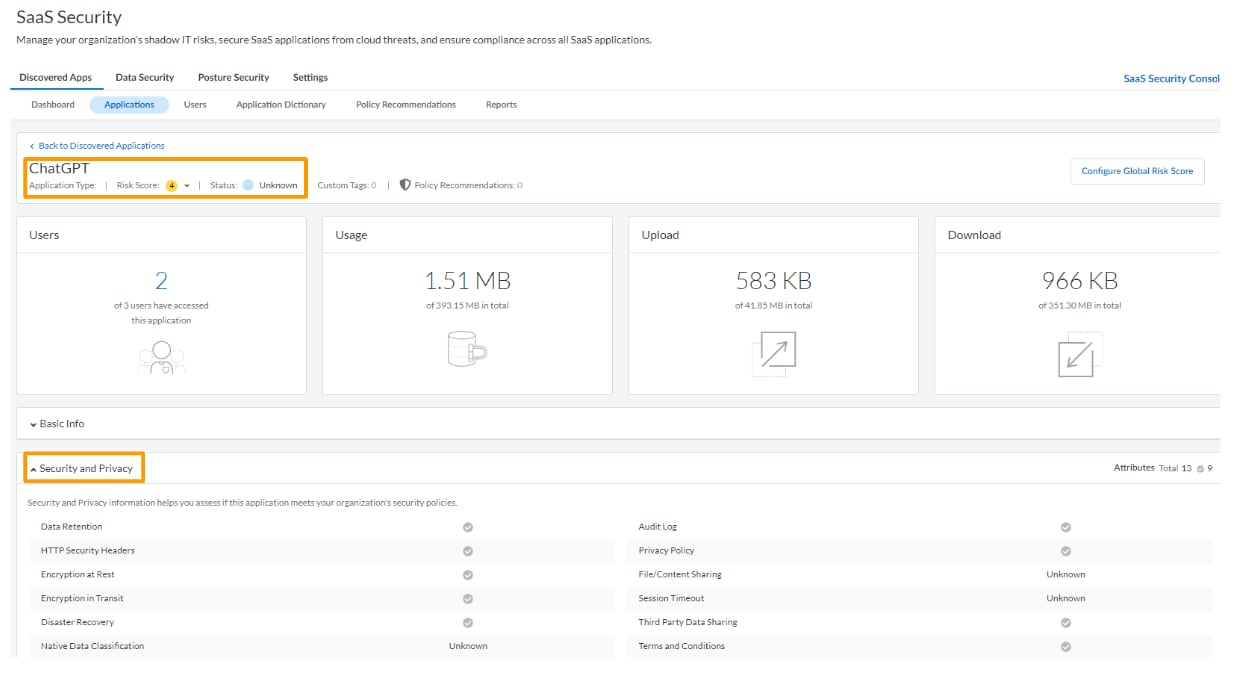

Comprehensive App Usage Visibility

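Customers of Next-Generation Firewalls, VM-Series, Prisma Access, or Prisma SD-WAN can now get complete visibility into their network traffic. ChatGPT traffic is captured under the App-ID openai-chatgpt and can be explored with the firewall's simple search function. Our Next-Generation CASB customers can also take advantage of additional application-level security and privacy insights for ChatGPT.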

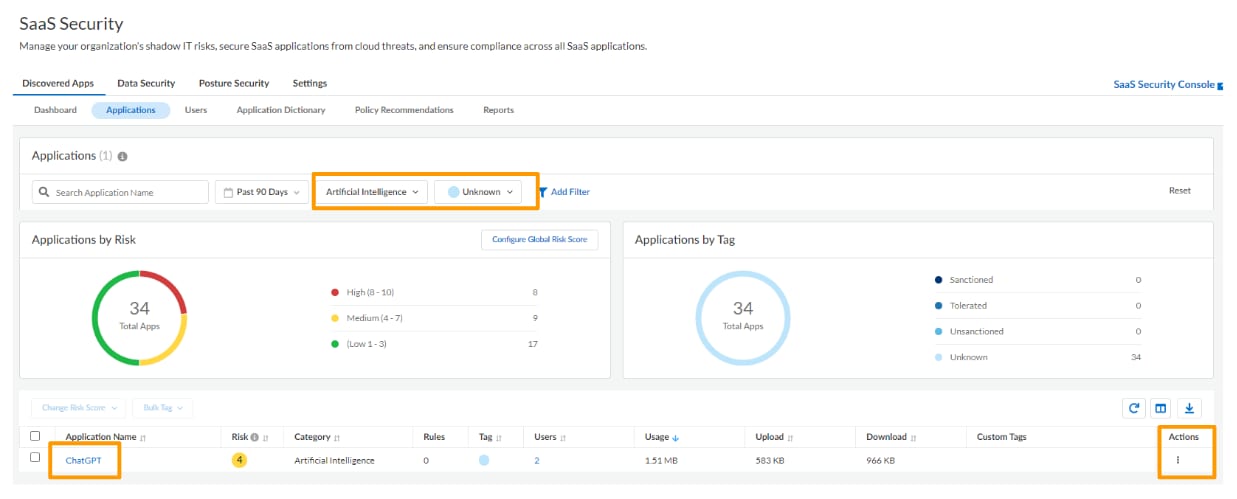

Granular SaaS Application Controls

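Access to ChatGPT can now be controlled granularly by user, by user group, and by application function. For example, you can restrict ChatGPT access to a small group of authorized users, or specifically block the app's messaging function and API.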

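You can enforce these controls through firewall policy using App-ID, or by using the new "Artificial Intelligence" SaaS category in Next-Generation CASB, Prisma Access, or Next-Generation Firewalls.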

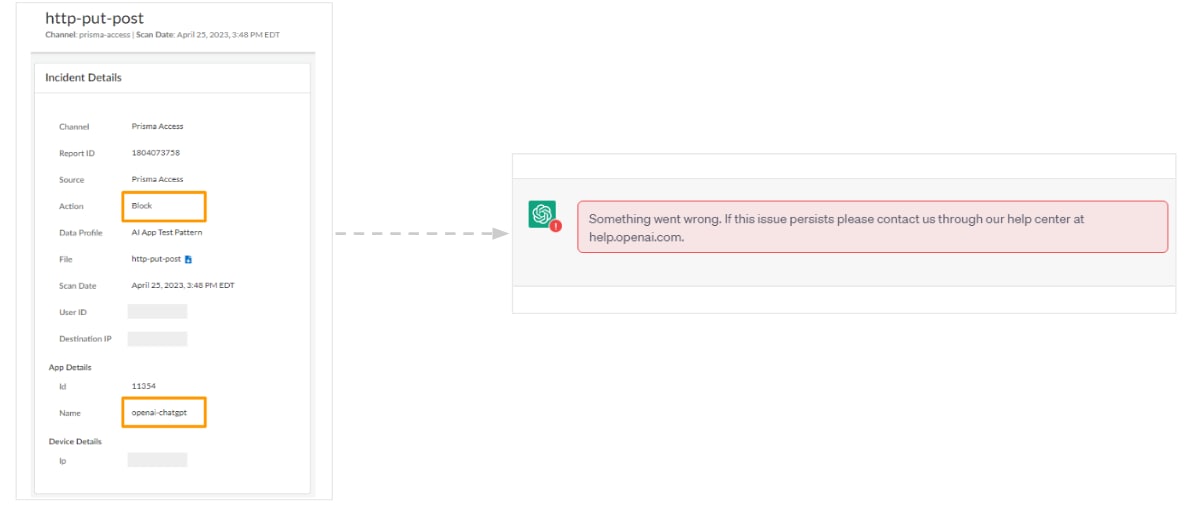
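
To make the idea of control by user group and application function concrete, here is a minimal sketch of first-match policy evaluation in Python. It is an illustration only and not PAN-OS or NG-CASB configuration syntax: the `Rule` type, the `evaluate` helper, the `ai-pilot` group, and the `api` and `chat` function labels are all hypothetical names. Only the App-ID `openai-chatgpt` comes from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    app: str              # application identifier (the App-ID named in this post)
    groups: frozenset     # user groups the rule applies to; empty means any group
    functions: frozenset  # app functions the rule applies to; empty means any function
    action: str           # "allow" or "block"


def evaluate(rules, app, user_groups, function, default="block"):
    """Return the action of the first matching rule, or the default if none match."""
    for rule in rules:
        if rule.app != app:
            continue
        if rule.groups and not (rule.groups & set(user_groups)):
            continue
        if rule.functions and function not in rule.functions:
            continue
        return rule.action
    return default


# Hypothetical policy: block the API for everyone, then allow one pilot group.
RULES = [
    Rule("openai-chatgpt", frozenset(), frozenset({"api"}), "block"),
    Rule("openai-chatgpt", frozenset({"ai-pilot"}), frozenset(), "allow"),
]

if __name__ == "__main__":
    print(evaluate(RULES, "openai-chatgpt", ["ai-pilot"], "chat"))
    print(evaluate(RULES, "openai-chatgpt", ["ai-pilot"], "api"))
    print(evaluate(RULES, "openai-chatgpt", ["sales"], "chat"))
```

Rule order matters in this sketch: the API block is listed first so that it applies even to members of the allowed group, and anything that matches no rule falls through to the default of block.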

Advanced Data Security

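Our Enterprise Data Loss Prevention (DLP) solution performs inline, ML-based data classification in real time to detect and stop sensitive data from leaving your network. This lets administrators define inline DLP policies that identify and block sensitive data exfiltration to generative AI apps such as ChatGPT without blocking access to the app entirely.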
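
As a rough illustration of inspecting content inline rather than blocking the whole app, the Python sketch below checks an outgoing prompt for sensitive patterns and blocks only when something is found. It deliberately swaps in simple regular expressions plus a Luhn checksum in place of the ML-based classification described above, and it is not how Enterprise DLP is implemented. The function names and the two patterns (a US Social Security number format and payment card numbers) are assumptions chosen for the example.

```python
import re

# Assumed example patterns; a real DLP engine uses far richer classifiers.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum, used to reduce false positives on card-like digit runs."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> list:
    """Return labels for the sensitive data types found in the text."""
    findings = []
    if SSN_RE.search(text):
        findings.append("ssn")
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 16 and luhn_ok(digits):
            findings.append("card")
            break
    return findings


def verdict(text: str) -> str:
    """Block only the request that carries sensitive data; allow everything else."""
    return "block" if classify(text) else "allow"


if __name__ == "__main__":
    print(verdict("Please help me fix the bug in parse()"))
    print(verdict("Customer card: 4111 1111 1111 1111"))
```

The per-request verdict is the point of the sketch: an ordinary prompt passes through, while a prompt containing a card number or SSN-formatted value is stopped, so access to the app itself stays available.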

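Because NG-CASB and Enterprise DLP are delivered across the entire network security platform, including Next-Generation Firewalls and Prisma Access, customers can enforce a single, consistent data security policy for users in the office as well as employees working from home or on the road.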

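Simply add the NG-CASB bundle to your Palo Alto Networks Next-Generation Firewall or Prisma Access deployment, or add inline DLP, and define the policies described above to help keep your organization's sensitive data from leaking to ChatGPT.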

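As new generative AI apps continue to emerge, we will keep expanding our app catalog to give our customers comprehensive data protection. To learn more about preventing data leakage from the use of AI apps such as ChatGPT, contact Palo Alto Networks to get started today.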